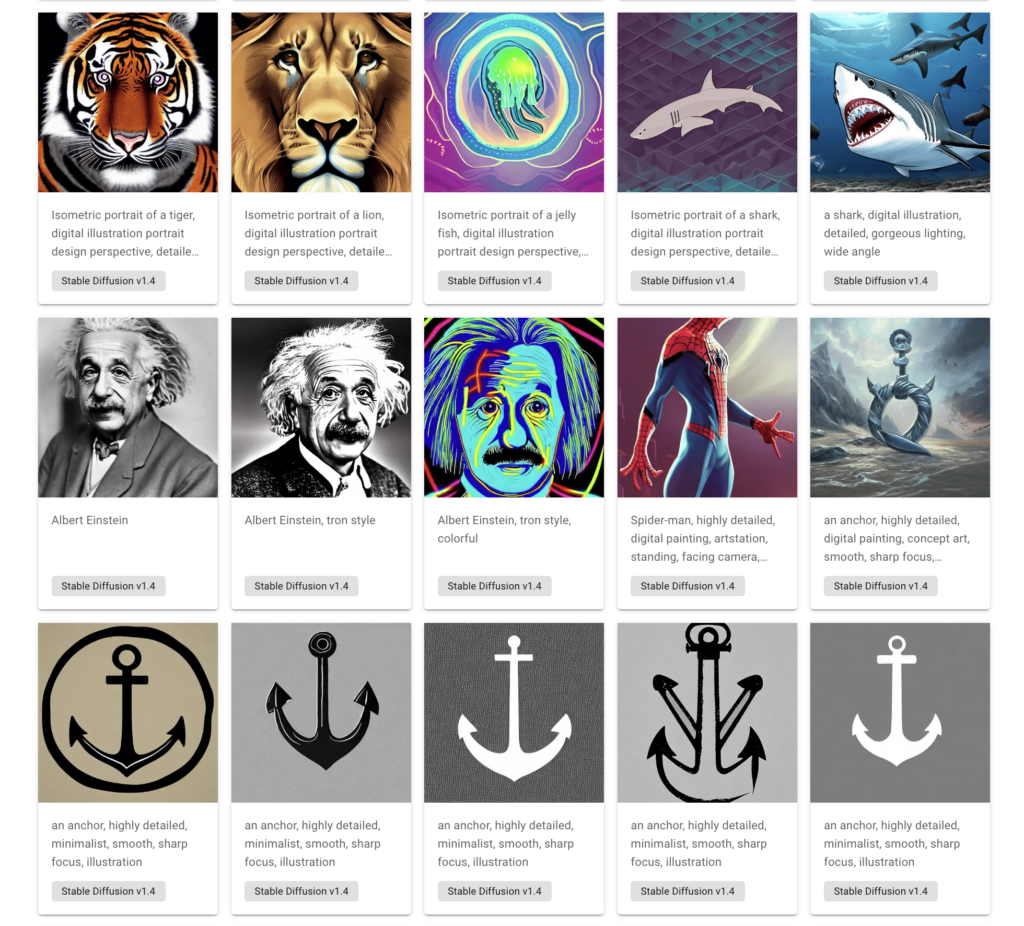

The world of AI-generated images has exploded. If you’ve never heard of DALL-E, Midjourney, or Stable Diffusion, here’s a quick explanation: you can now generate images from text. These AI models have learned a huge range of concepts and can mix and match them for some wild results.

Here at Anchor Hosting, I spend a lot of my time automating tasks through bash scripts and PHP. The openness of Stable Diffusion got the best of me. I had to see what I could create. I wrote a script to endlessly generate images on my M2 MacBook Air.

For me, the biggest contribution to the world of image generation comes from Stable Diffusion, because anyone can download and run it.

If you have a powerful enough computer, you can download the latest Stable Diffusion model, v1.4, and generate images for free, completely offline. Amazingly, the entire model is only about 4GB. It’s mind-blowing that you can describe anything imaginable in text to a 4GB AI model and have it produce an image. It seems impossible, but it’s true. For a good overview, I’d recommend listening to the Changelog episode “Stable Diffusion breaks the internet.”

Running image generation heats up my laptop quite a bit. To keep things free, I connected my laptop to a Jackery battery charged by solar panels and let it run uninterrupted overnight.

If you’ve managed to install Stable Diffusion, you can run something like `python scripts/txt2img.py --plms --prompt "prompt here"` and it will create an image. This is amazing, but it isn’t a great workflow. It would be nice to save the text prompts along with the images themselves. It would also be great if it dumped the images into a viewable, searchable gallery. So that’s what I built.

The script is powered by two files: stable-diffusion.sh and stable-diffusion.php. They are wrappers around Stable Diffusion’s scripts/txt2img.py script, which does the actual image generation. The PHP script stores the image details in a data.json file, which powers the website. See here for the website code. The wrapping shell script does a few smart things, like discarding empty outputs, renaming images, and converting them to WebP format.
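Picking the new image’s number is the heart of the renaming step. The PHP script takes the highest `id` in data.json and adds one. As a rough, shell-only sketch of the same idea, assuming images are saved as `<id>.webp` in a single folder (the `next_image_id` function is hypothetical and not part of the original scripts), the next id can also be derived from the filenames:

```bash
#!/usr/bin/env bash

# Hypothetical helper: print the next free id for images saved as <id>.webp
# in a directory, i.e. the highest numeric id + 1 (or 1 if there are none).
# Same idea as stable-diffusion.php, but it reads filenames instead of data.json.
next_image_id() {
  local dir=$1
  local highest=0
  local file name
  for file in "$dir"/*.webp; do
    # With no matches the glob stays literal, so skip anything that doesn't exist
    [ -e "$file" ] || continue
    name=$(basename "$file" .webp)
    # Ignore files whose name isn't purely numeric
    [[ $name =~ ^[0-9]+$ ]] || continue
    # Compare as base-10 numbers (10# avoids octal parsing of names like "08")
    if (( 10#$name > highest )); then
      highest=$(( 10#$name ))
    fi
  done
  echo $(( highest + 1 ))
}

# Example: next_image_id /Users/austin/Documents/stable-diffusion/outputs/images
```

Comparing the names as numbers matters here: a plain alphabetical listing like `ls` sorts `10.webp` before `9.webp`, so simply taking the last entry would pick the wrong file.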

stable-diffusion.sh

```bash
#!/usr/bin/env bash

cd /Users/austin/Documents/stable-diffusion || exit 1

echo "Running prompt: $1"

python scripts/txt2img.py --plms --prompt "$1" --n_samples 1 --skip_grid

cd /Users/austin/Documents/stable-diffusion/outputs/txt2img-samples/samples/ || exit 1

# Samples are zero-padded (00000.png, 00001.png, ...), so the last one listed is the newest
last_file=$( ls -A *.png | tail -n 1 )

# Treat outputs of 100 KB or less as empty and discard them
check_file_size=$( du -k "$last_file" | cut -f 1 )

if [ "$check_file_size" -le 100 ]; then
  echo "File appears empty. Removing $last_file"
  rm "$last_file"
  exit 1
fi

# Record the prompt in data.json and get back the new image id
new_id=$( php ~/Scripts/stable-diffusion.php new "$1" "Stable Diffusion v1.4" )

echo "Converting /txt2img-samples/samples/$last_file to /images/$new_id.webp"

cwebp -q 90 "/Users/austin/Documents/stable-diffusion/outputs/txt2img-samples/samples/$last_file" -o "/Users/austin/Documents/stable-diffusion/outputs/images/$new_id.webp"

echo "Removing /txt2img-samples/samples/$last_file"

rm "/Users/austin/Documents/stable-diffusion/outputs/txt2img-samples/samples/$last_file"
```

stable-diffusion.php

<?php

if ( ! empty( $argv[1] ) && $argv[1] == "new-id" ) {

if( file_exists( '/Users/austin/Documents/stable-diffusion/outputs/data.json' ) ) {

$data = file_get_contents( '/Users/austin/Documents/stable-diffusion/outputs/data.json' );

$images = json_decode( $data )->images;

usort($images, fn($a, $b) => $a->id - $b->id);

echo $images[ count( $images ) -1 ]->id + 1;

} else {

echo "1\n";

}

return;

}

if ( ! empty( $argv[1] ) && $argv[1] == "new" ) {

if( file_exists( '/Users/austin/Documents/stable-diffusion/outputs/data.json' ) ) {

$data = json_decode( file_get_contents( '/Users/austin/Documents/stable-diffusion/outputs/data.json' ) ) ;

$images = $data->images;

usort($images, fn($a, $b) => $a->id - $b->id);

$image_id = $images[ count( $images ) -1 ]->id + 1;

} else {

$images = [];

$image_id = 1;

}

$created_at = time();

$file = "/Users/austin/Documents/stable-diffusion/outputs/images/{$image_id}.webp";

if ( file_exists ( $file ) ) {

$created_at = filemtime($file);

}

$images[] = [

"id" => $image_id,

"description" => $argv[2],

"model" => $argv[3],

"created_at" => $created_at

];

$data = (object) [

"images" => $images

];

file_put_contents( '/Users/austin/Documents/stable-diffusion/outputs/data.json', json_encode( $data, JSON_PRETTY_PRINT ) );

echo "$image_id\n";

return;

}

if ( ! empty( $argv[1] ) && $argv[1] == "generate-timestamps" ) {

if( file_exists( '/Users/austin/Documents/stable-diffusion/outputs/data.json' ) ) {

$data = json_decode( file_get_contents( '/Users/austin/Documents/stable-diffusion/outputs/data.json' ) ) ;

$images = $data->images;

usort($images, fn($a, $b) => $a->id - $b->id);

$image_id = $images[ count( $images ) -1 ]->id + 1;

}

$images_updates = 0;

foreach( $images as $image ) {

if ( empty( $image->created_at ) ) {

$file = "/Users/austin/Documents/stable-diffusion/outputs/images/{$image->id}.webp";

if ( file_exists ( $file ) ) {

$image->created_at = filemtime($file);

$images_updates++;

}

}

}

$data = (object) [

"images" => $images

];

file_put_contents( '/Users/austin/Documents/stable-diffusion/outputs/data.json', json_encode( $data, JSON_PRETTY_PRINT ) );

echo "Updates timestamps for $images_updates images\n";

return;

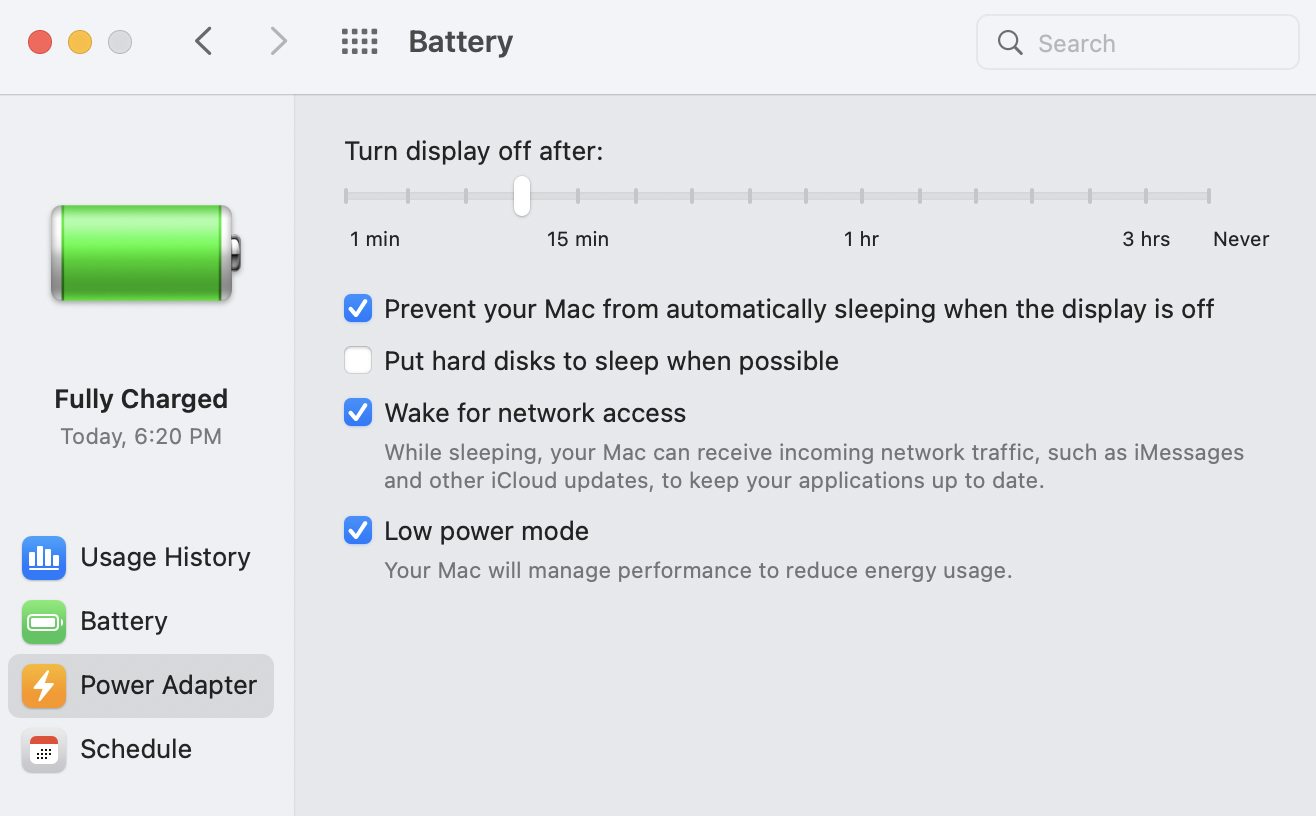

}Power usage recommendations and overnight results.

The M2 chip is very efficient when you select Low Power Mode. Enabling it cut the power draw from the Jackery roughly in half and only increased the time to generate each image from 3 minutes to 4.5 minutes. My theory was that running in low power mode would actually produce more images per unit of energy consumed.

- Night #1 – 8 hours with Low Power Mode enabled generated 94 images. The Jackery drained 11%.

- Night #2 – 8 hours in normal mode generated 160 images. The Jackery drained 19%.

- Night #3 – 8 hours in normal mode generated 155 images. The Jackery drained 17%.

- Night #4 – 8 hours in normal mode generated 154 images. The Jackery drained 15%.

So it turns out both modes produce about the same number of images per unit of battery, roughly 8 to 10 images for every 1% drained. Low power mode just needs more time to get there.
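The ratio behind that conclusion is just images generated divided by the battery percentage drained. A quick sketch using only the four nights listed above (the `images_per_percent` function is a hypothetical name; awk does the decimal division that bash integer arithmetic can’t):

```bash
#!/usr/bin/env bash

# Images generated per 1% of battery drained, rounded to one decimal place.
# Bash arithmetic only handles integers, so awk does the division.
images_per_percent() {
  awk -v images="$1" -v drained="$2" 'BEGIN { printf "%.1f\n", images / drained }'
}

# Overnight results from the list above: "label images percent_drained"
nights=(
  "Night1_low_power 94 11"
  "Night2 160 19"
  "Night3 155 17"
  "Night4 154 15"
)

for night in "${nights[@]}"; do
  read -r label images drained <<< "$night"
  echo "$label: $(images_per_percent "$images" "$drained") images per 1%"
done
```

This prints 8.5 for the low power night and 8.4, 9.1, and 10.3 for the three normal nights, so low power mode doesn’t come out ahead.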

So what can you do with the script?

Well, that’s just it: anything is possible. The point of scripting it is to explore the capabilities of the Stable Diffusion model itself. When you find a prompt that consistently gives you a desirable style or type of image, scripting lets you explore it in more detail by feeding in variations of the original prompt. Even just running the same text prompt over and over again is sometimes interesting. Here is an example script, random.sh, that endlessly creates images of anchors using a few different colors and styles.

random.sh

```bash
#!/usr/bin/env bash

colors=("80s" "Colorful" "Neon" "Synthwave")

subjects=("garden" "maze" "safari" "tundra")

camera=("Aerial View" "Canon50" "Cinematic" "Close-up" "Color Grading"
        "Dramatic" "Film Grain" "Fisheye Lens" "Glamor Shot" "Golden Hour"
        "HD" "Landscape" "Lens Flare" "Macro" "Polaroid"
        "Photoshoot" "Portrait" "Studio Lighting" "White Balance" "Wildlife Photography")

style=("2D" "8-bit" "16-bit" "Anaglyph" "Anime"
       "Art Nouveau" "Bauhaus" "Baroque" "CGI" "Cartoon"
       "Comic Book" "Concept Art" "Constructivist" "Cubist" "Digital Art"
       "Dadaist" "Expressionist" "Fantasy" "Fauvist" "Figurative"
       "Graphic Novel" "Geometric" "Hard Edge Painting" "Hydrodipped" "Impressionistic"
       "Lithography" "Manga" "Minimalist" "Modern Art" "Mosaic"
       "Mural" "Naive" "Neoclassical" "Photo" "Realistic"
       "Rococo" "Romantic" "Street Art" "Symbolist" "Surrealist"
       "Visual Novel" "Watercolor" "Tron" "Dr. Seuss")

adjectives=("Epic" "Majestic")

while :
do
  # Store each pick in a singular name so the arrays aren't overwritten on the first pass
  color=${colors[$(( RANDOM % ${#colors[@]} ))]}
  camera_view=${camera[$(( RANDOM % ${#camera[@]} ))]}
  art_style=${style[$(( RANDOM % ${#style[@]} ))]}
  subject=${subjects[$(( RANDOM % ${#subjects[@]} ))]}
  adjective=${adjectives[$(( RANDOM % ${#adjectives[@]} ))]}

  # Only color and style are used in this prompt; the other picks are ready to mix in
  ~/Scripts/stable-diffusion.sh "an anchor, $color, detailed illustration, $art_style"
done
```

To stop an actively running script, press Control + C. We can also randomize other parts of the text prompt, like adjectives, subjects, camera styles, or perspectives. Or just randomize different words and concepts however you’d like.
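Building on that idea, one way to fold subjects, camera styles, and adjectives into the prompt is a small helper that picks a random element from any list. This is a sketch under assumptions, not the original script: `pick` and `build_prompt` are hypothetical names, the word lists are trimmed copies of the arrays in random.sh, and the prompt’s word order is just one choice. Passing the array elements as arguments keeps it compatible with the older bash 3.2 that macOS ships as `/bin/bash`.

```bash
#!/usr/bin/env bash

# Print one randomly chosen argument. Call it as: pick "${array[@]}"
pick() {
  local items=("$@")
  printf '%s\n' "${items[RANDOM % ${#items[@]}]}"
}

# Trimmed word lists; in practice, reuse the full arrays from random.sh
adjectives=("Epic" "Majestic")
colors=("80s" "Colorful" "Neon" "Synthwave")
subjects=("garden" "maze" "safari" "tundra")
camera=("Aerial View" "Cinematic" "Golden Hour" "Macro")
style=("Anime" "Watercolor" "Tron")

# Hypothetical prompt builder combining one pick from every category
build_prompt() {
  printf '%s anchor in a %s, %s, %s, detailed illustration, %s\n' \
    "$(pick "${adjectives[@]}")" "$(pick "${subjects[@]}")" \
    "$(pick "${colors[@]}")" "$(pick "${camera[@]}")" "$(pick "${style[@]}")"
}

# Uncomment to generate images endlessly (press Control + C to stop):
# while :; do
#   ~/Scripts/stable-diffusion.sh "$(build_prompt)"
# done
```

Keeping the random choice in one function means adding a new category is a single extra array and one more `pick` call in the prompt template.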