Ever have a website’s disk usage unexpectedly grow by more than 100 GB? This week I did. This is how I quickly figured out where the extra data was located, with the help of rclone and Kinsta’s SSH access.

Good hosting providers let you know when you’re over your limits.

I use Kinsta to host all of my WordPress websites. If you ever go over their allocated disk space, they simply give you an overage warning. As long as you lower your disk usage before the next billing date, you won’t incur any extra fees or need to upgrade your limits.

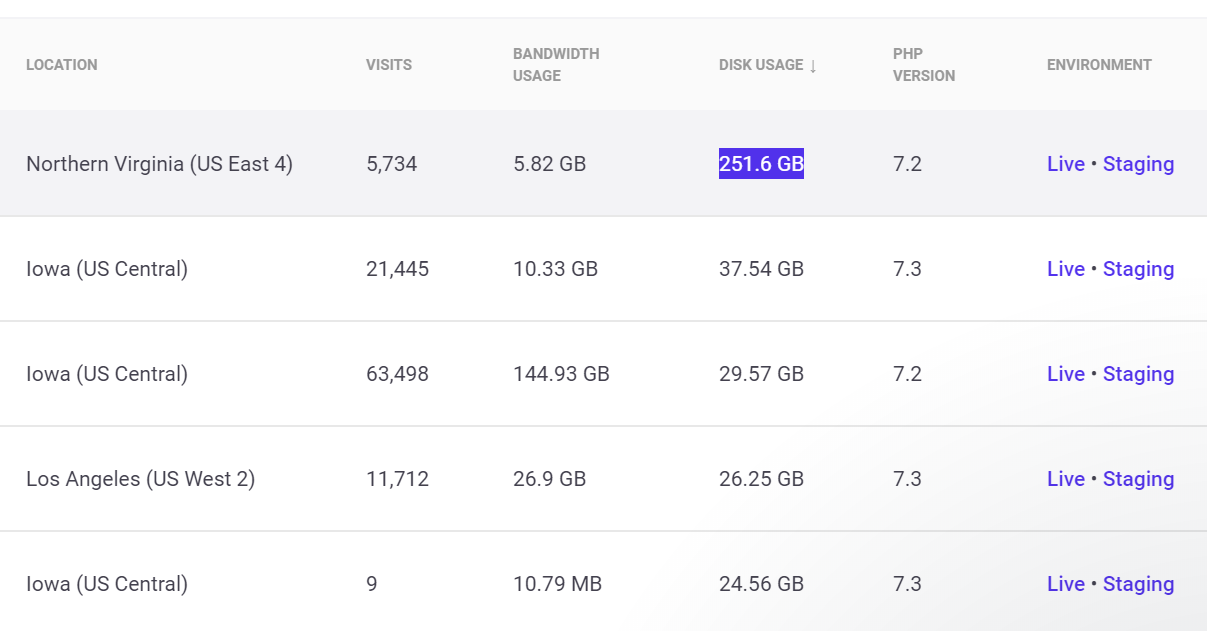

They have a fantastic interface that lets you sort all of your sites by disk usage, so I could quickly see which site was the culprit. The top site was using over 250 GB of storage. Yikes!

Next, connect to the site over SSH. You can use built-in Unix commands such as du to see folder sizes, but they can be a bit cumbersome. Instead, I prefer rclone’s ncdu subcommand, which gives a detailed, interactive breakdown. The following commands download rclone and run it:

cd ~/private

wget https://downloads.rclone.org/rclone-current-linux-amd64.zip

unzip rclone-current-linux-amd64.zip

cd rclone-v*/

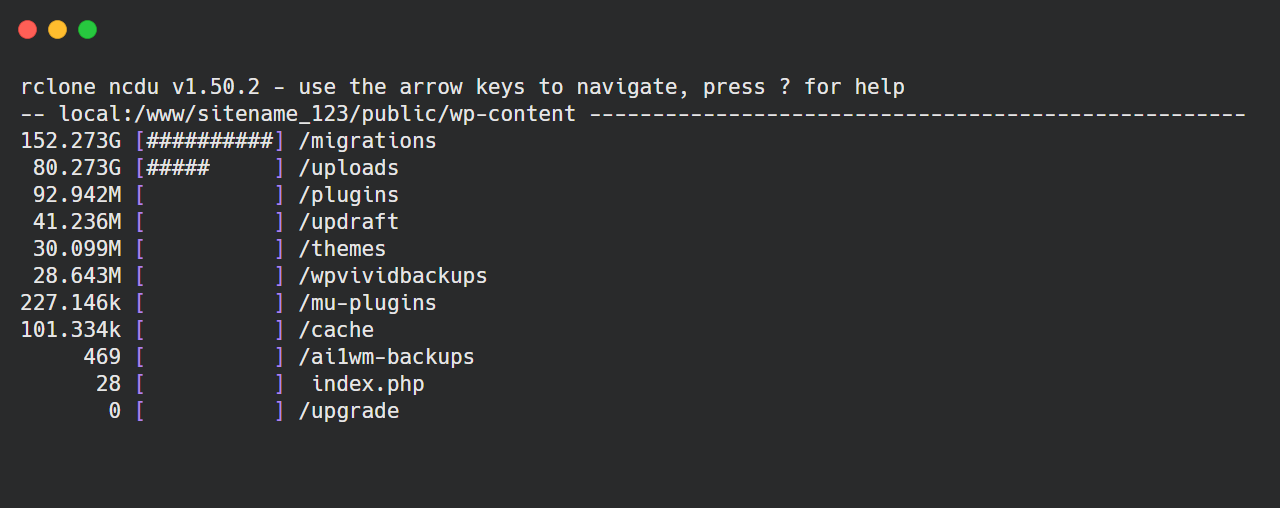

./rclone ncdu ~/public

This launches rclone ncdu, which is really easy to use. Use the up and down arrow keys to move through the list, the right arrow key to dig into a folder, and the left arrow key to back out of it. You can see very quickly exactly where the excess data is stored.
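If you repeat these steps later, after a newer rclone release, ~/private can end up holding more than one rclone-v* folder. `cd rclone-v*/` then expands to several arguments, and depending on the shell, cd either fails with a "too many arguments" error or lands in an older folder. Here is a minimal sketch of a workaround, assuming GNU `sort -V` is available (as on typical Linux hosts) and that the archive unpacks to folders named like rclone-v1.x.y-linux-amd64. The function name `newest_rclone_dir` is hypothetical.

```shell
# newest_rclone_dir [DIR]: print the path of the highest-versioned
# rclone-v*-linux-amd64 folder inside DIR (default: ~/private).
# Returns non-zero if no such folder exists.
newest_rclone_dir() {
  local base="${1:-$HOME/private}"
  local match
  # Version-sort (GNU sort -V) so v1.10 ranks above v1.9, then keep the last line.
  match=$(find "$base" -maxdepth 1 -type d -name 'rclone-v*-linux-amd64' \
    | sort -V | tail -n 1)
  [ -n "$match" ] || return 1
  printf '%s\n' "$match"
}

# Usage: dir=$(newest_rclone_dir) && cd "$dir" && ./rclone ncdu ~/public
```

Chaining with `&&` means rclone only runs if a matching folder was actually found.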

rclone ncdu
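For a quick, non-interactive listing, the built-in du command can give a rough equivalent. This is a minimal sketch assuming GNU du and GNU sort, as found on typical Linux hosts (BSD/macOS du uses -d instead of --max-depth). The function name `largest_dirs` is hypothetical, and it defaults to ~/public, the path used above.

```shell
# largest_dirs [DIR] [N]: print the N (default 10) largest folders directly
# under DIR (default: ~/public), largest first. DIR's own total is included;
# files sitting directly in DIR count toward that total but are not listed.
# Requires GNU du (--max-depth) and GNU sort (-h for human-readable sizes).
largest_dirs() {
  local target="${1:-$HOME/public}"
  local count="${2:-10}"
  # -x: stay on one filesystem; -h: human-readable sizes such as 1.9M.
  # Permission errors are discarded so they don't clutter the listing.
  du -xh --max-depth=1 "$target" 2>/dev/null | sort -rh | head -n "$count"
}

# Usage: largest_dirs ~/public 5
```

Passing the largest subfolder back in (for example `largest_dirs ~/public/wp-content` if wp-content turns out to be the biggest) drills down one level at a time, which is roughly what ncdu lets you do interactively.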